Aggregation level of the evaluation

Introduction

On a number of points, the SEP offers room for different interpretations of how to approach the evaluation. This particularly applies to the indicators and the aggregation level. Researchers often prefer to evaluate at the level of smaller research units, because they can immediately identify with them. Many administrators, meanwhile, prefer to evaluate at a higher aggregation level, not only because this is clearer and takes much less time, but also because there may be other advantages to this, such as promoting interconnectedness within the larger research unit or showing how interdisciplinarity is shaped or stimulated.

The choice of aggregation level has implications for the breadth and depth of the evaluation. For example, evaluating at a low aggregation level makes it difficult for the visitation committee to form an opinion about a unit’s viability, financial and personnel policy, etc., all the more so if (the majority of) research funding and policy are coordinated by larger units. Assessing at a high aggregation level may mean that the mission, strategy and results of the underlying units become more or less invisible, and those units may be unable to implement the outcomes of the evaluation in practice if those outcomes are too general or abstract.

There is also the option of evaluating across institutional boundaries, for example by doing a national evaluation for a specific domain, as was the case for linguistics in the 2010s. Although a comparative assessment such as this may provide specific information, it is complex, not least because the assessment process needs to provide sufficient room to highlight the position, mission and strategy of the individual units in order to reflect the spirit of the SEP 2021-2027.

Options

The key is to strike a good balance. The evaluation should be both workable and effective, with the goal of the SEP playing a guiding role. The approach should ensure that the evaluation provides all those involved with sufficient insights and information.

This can be achieved with the following two models:

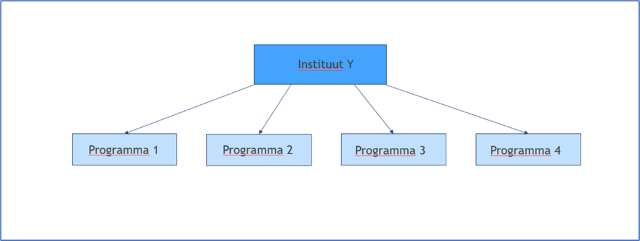

- Evaluation at the level of the institute, school or programme.

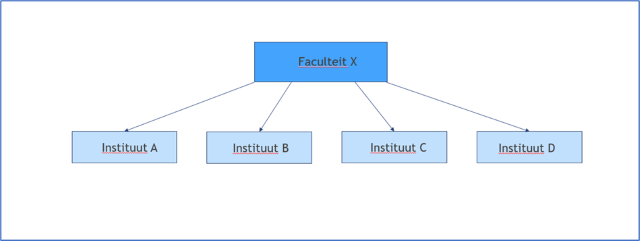

- The embedded or umbrella model, evaluating at the level of the institution or faculty, including institutes, programmes, etc.

When it comes to independently evaluating institutes, research schools or programmes, the SEP provides instructions on the desired scope and basic principles. In any case, the aggregation level should be sufficient to allow meaningful statements to be made about strategy and viability. It is evident that independent evaluations should pay attention to the relationship with (other units within) the overarching organisation, especially when it comes to shared policy, e.g., in relation to HRM or PhDs.

The advantage of choosing a higher aggregation level, in the form of an embedded or umbrella model, is that an organisation’s various dimensions and levels can be highlighted in conjunction with one another. The model consists of two layers: a self-evaluation of the larger, overarching unit (faculty, large research institute) and the self-evaluation(s) of the underlying components (e.g., at the level of research institutes or units).

The overarching self-evaluation addresses all of the issues shared by the underlying units, such as financial and administrative policy, HRM, academic culture, PhDs, etc. This is particularly useful for creating a realistic picture of viability; moreover, as mentioned above, it saves a lot of work. This layered approach still allows a school/institute/programme to take part in a national visitation if it nevertheless decides to do so.

Finally, an embedded evaluation has one further key advantage: this approach is better suited to using the SEP as a tool in the quality assurance cycle.